Annual/Biennial Program Assessment Report

Assessment Report

AY: 2022-2023

College: College of Letters and Science

Department: Mathematical Sciences

Submitted by: Elizabeth Burroughs, Department Head, and Ryan Grady, Undergraduate Program Committee Chair

*Undergraduate Assessment reports are to be submitted annually. The report deadline is October 15th.

*Graduate Assessment reports are to be submitted biennially. The report deadline is October 15th.

Program(s) Assessed:

List all majors (including each option), minors, and certificates that are included in this assessment:

Mathematics (major): Applied Math, Math, Math Teaching, Statistics options

Yes. Have you reviewed the most recent Annual Program Assessment Report submitted and Assessment and Outcomes Committee feedback? (please contact Assistant Provost Deborah Blanchard if you need a copy of either one).

The Assessment Report should contain the following elements, which are outlined in this template along with additional instructions and information.

- Past Assessment Summary.

- Action Research Question.

- Assessment Plan, Schedule, and Data Source(s).

- What Was Done.

- What Was Learned.

- How We Responded.

- Closing the Loop.

Sample reports and guidance can be found at: https://www.montana.edu/provost/assessment/program_assessment.html

1. Past Assessment Summary. Briefly summarize the findings from the last assessment report conducted related to the PLOs being assessed this year. Include any findings that influenced this cycle’s assessment approach. Alternatively, reflect on the program assessment conducted last year, and explain how that impacted or informed any changes made to this cycle’s assessment plan.

Recent assessment has shown that greater than 70% of our students are meeting PLOs as measured by analysis of signature assignments in a range of courses across our major options. We continue to refine our teaching of proof and proving across our options.

Recently we have wondered about the effectiveness of our programs in achieving not only content proficiency, but other aspects of success such as preparation for careers and graduate studies or perception of students regarding the quality of their educational experience. As such, we used a modified assessment model this cycle (as described in Section 4 below).

2. Action Research Question. What question are you seeking to answer in this cycle’s assessment?

What can we learn from students’ perceptions of our programs regarding content, rigor, support, and preparation for future goals?

3. Assessment Plan, Schedule, and Data Source(s).

a) Please provide a multi-year assessment schedule that will show when all program learning outcomes will be assessed, and by what criteria (data)

ASSESSMENT PLANNING CHART

| PROGRAM LEARNING OUTCOME | 2020 | 2021 | 2022 | 2023 | Data Source* |
|---|---|---|---|---|---|
| 1. Students will demonstrate mathematical reasoning or statistical thinking | X | | X | | M 242 Signature Assignment |
| 2. Students will demonstrate effective mathematical or statistical communication | X | | X | | M 242 Signature Assignment |
| 3. Students will develop a range of appropriate mathematical or statistical methods for proving, problem solving, and modeling | | X | | X | M 384, M 329, and Stat 412 Signature Assignments |

The Undergraduate Program Committee is responsible for annually assigning a program assessment task force. Members of the task force will be the two most recent faculty members to have taught the course in question; if they are not available, the Department Head will make a suitable alternate appointment. The assessment task force will select the signature assignments from the bank of signature assignments. The bank is initially populated with the signature assignments that have been used in the past five years and will be updated by the committee as necessary, based on results of the assessment.

The task force will determine whether to assess a census of the assignments from Math/Stat Majors/Minors in the course, or whether to assess a random selection. Where possible, a minimum of 10 student assignments should be assessed for each course.
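The census-or-sample choice described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `select_assignments`, the `use_census` flag, and the seeded random generator are hypothetical and are not part of the department's documented procedure.

```python
import random

MIN_SAMPLE = 10  # minimum number of assignments per course suggested by the plan


def select_assignments(assignment_ids, use_census, sample_size=MIN_SAMPLE, seed=None):
    """Return the assignments to score: all of them (census) or a random sample.

    Hypothetical helper; the names and the seeding are assumptions for illustration.
    """
    ids = list(assignment_ids)
    # A census, or a pool no larger than the sample size, means scoring everything.
    if use_census or len(ids) <= sample_size:
        return ids
    rng = random.Random(seed)  # seeded so the selection can be reproduced later
    return rng.sample(ids, sample_size)


# Example: 30 eligible assignments, random selection of 10.
chosen = select_assignments(range(30), use_census=False, seed=2023)
print(len(chosen))
```

Recording the seed alongside the results would let a later task force reproduce the same selection.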

The task force will report the results to the Undergraduate Program Committee and the Department Head, who will distribute it to the department. The first faculty meeting in September will annually be the forum at which the assessment report is discussed and action recommended.

b) What are the threshold values for which your program demonstrates student achievement?

THRESHOLD VALUES

| PROGRAM LEARNING OUTCOME | Threshold Value | Data Source* |
|---|---|---|
| 1. Students will demonstrate mathematical reasoning or statistical thinking. | The threshold value for this outcome is for 70% of assessed students to score acceptable or proficient on the scoring rubric. | M 242 Signature Assignment |
| 2. Students will demonstrate effective mathematical or statistical communication. | The threshold value for this outcome is for 70% of assessed students to score acceptable or proficient on the scoring rubric. | M 242 Signature Assignment |
| 3. Students will develop a range of appropriate mathematical or statistical methods for proving, problem solving, and modeling. | The threshold value for this outcome is for 70% of assessed students to score acceptable or proficient on the scoring rubric. | M 384, M 329, and Stat 412 Signature Assignments |
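As a rough illustration of how the 70% threshold could be checked for one outcome, the sketch below counts the share of rubric ratings that are acceptable or proficient. The function name `meets_threshold`, the label "below acceptable", and the example ratings are hypothetical; the report does not list the rubric's lower level or any raw scores.

```python
THRESHOLD = 0.70  # share of assessed students required by the plan
PASSING_LEVELS = {"acceptable", "proficient"}  # rubric levels named in the table


def meets_threshold(ratings, threshold=THRESHOLD):
    """Return (share_passing, threshold_met) for a list of rubric ratings."""
    if not ratings:
        raise ValueError("no ratings to evaluate")
    passing = sum(1 for r in ratings if r.lower() in PASSING_LEVELS)
    share = passing / len(ratings)
    return share, share >= threshold


# Made-up ratings for ten assessed students (illustration only, not report data).
example = ["proficient"] * 4 + ["acceptable"] * 3 + ["below acceptable"] * 3
share, met = meets_threshold(example)
print(f"{share:.0%} passing, threshold met: {met}")
```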

4. What Was Done.

a) Was the completed assessment consistent with the program’s assessment plan? If not, please explain the adjustments that were made.

____ Yes _X_ No

Through recent assessments, we have established that our undergraduate programs effectively equip students to achieve our PLOs for mathematical reasoning, statistical thinking, discipline-specific communication, proving, problem solving, and modeling. In other words, we can confidently document student proficiency in our subject matter. Recently we have wondered about the effectiveness of our programs in achieving not only content proficiency, but other aspects of success such as preparation for careers and graduate studies or perception of students regarding the quality of their educational experience.

This year we implemented a pilot program assessment in Spring 2023, designed as an exit survey for program graduates. This experiment is a first step in answering our action research question: What can we learn from students’ perceptions of our programs regarding content, rigor, support, and preparation for future goals? We feel this provides new insight into PLO3 in particular.

b) How were data collected and analyzed and by whom? Please include method of collection and sample size.

In the spring of 2023 a Qualtrics survey was distributed to undergraduate students graduating in one of the department’s four major options. The responses were aggregated in Qualtrics before being exported for review by the department’s Undergraduate Program Committee.

The Spring 2023 survey drew 19 responses. Of these, n = 12 respondents gave at least partial answers to the items used for this program assessment. Among those 12, the two preparation items have missing responses (4 missing for graduate programs and 3 for jobs in the chosen field). Some of this missingness may reflect students who did not plan to pursue one path or the other and so chose not to respond.

These questions were a combination of radio button and Likert scale responses with options from (1) Very Ineffective to (5) Very Effective. There were also open response questions related to strengths and areas for improvement in the department.

| Field | Min | Max | Mean | Standard Deviation | Variance | Responses |
|---|---|---|---|---|---|---|
| The ability to analyze quantitative problems? | 4.00 | 5.00 | 4.58 | 0.49 | 0.24 | 12.00 |
| The ability to apply computational tools to quantitative problems? | 3.00 | 5.00 | 4.50 | 0.76 | 0.58 | 12.00 |

| Field | Min | Max | Mean | Standard Deviation | Variance | Responses |
|---|---|---|---|---|---|---|
| ...applying to graduate programs? | 1.00 | 5.00 | 3.75 | 1.30 | 1.69 | 8.00 |
| ...applying to jobs in your chosen field? | 2.00 | 5.00 | 3.56 | 1.34 | 1.80 | 9.00 |
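The summary columns in the tables above can be reproduced from raw Likert responses with Python's standard library. The sketch below is an assumption about how the figures were computed, not a description of the Qualtrics export: the helper name `summarize` is hypothetical, and skipped items are represented as `None`. For the first item, a minimum of 4, a maximum of 5, and a mean of 4.58 over 12 responses imply seven responses of 5 and five of 4, and the reported standard deviation and variance match the population (divide-by-n) formulas for that set.

```python
from statistics import mean, pstdev, pvariance


def summarize(responses):
    """Summarize Likert responses (1-5), ignoring skipped items given as None."""
    answered = [r for r in responses if r is not None]
    if not answered:
        raise ValueError("no answered responses")
    return {
        "min": min(answered),
        "max": max(answered),
        "mean": round(mean(answered), 2),
        "sd": round(pstdev(answered), 2),           # population standard deviation
        "variance": round(pvariance(answered), 2),  # population variance
        "responses": len(answered),
    }


# Responses implied by the reported min, max, mean, and count for the first item.
analyze_item = [5] * 7 + [4] * 5
print(summarize(analyze_item))
# {'min': 4, 'max': 5, 'mean': 4.58, 'sd': 0.49, 'variance': 0.24, 'responses': 12}
```

Filtering out `None` before computing the statistics mirrors how the preparation items report 8 and 9 responses rather than 12.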

c) Please provide a rubric that demonstrates how your data were evaluated.

For the response data used in this cycle, the rubric was embedded as the Likert scale responses to survey questions.

Additionally, the Undergraduate Program Committee has developed a rough rubric for the process of collection and analysis of survey data. In particular, a response rate of 70% or greater was desired.

5. What Was Learned.

a) Based on the analysis of the data, and compared to the threshold values established, what was learned from the assessment?

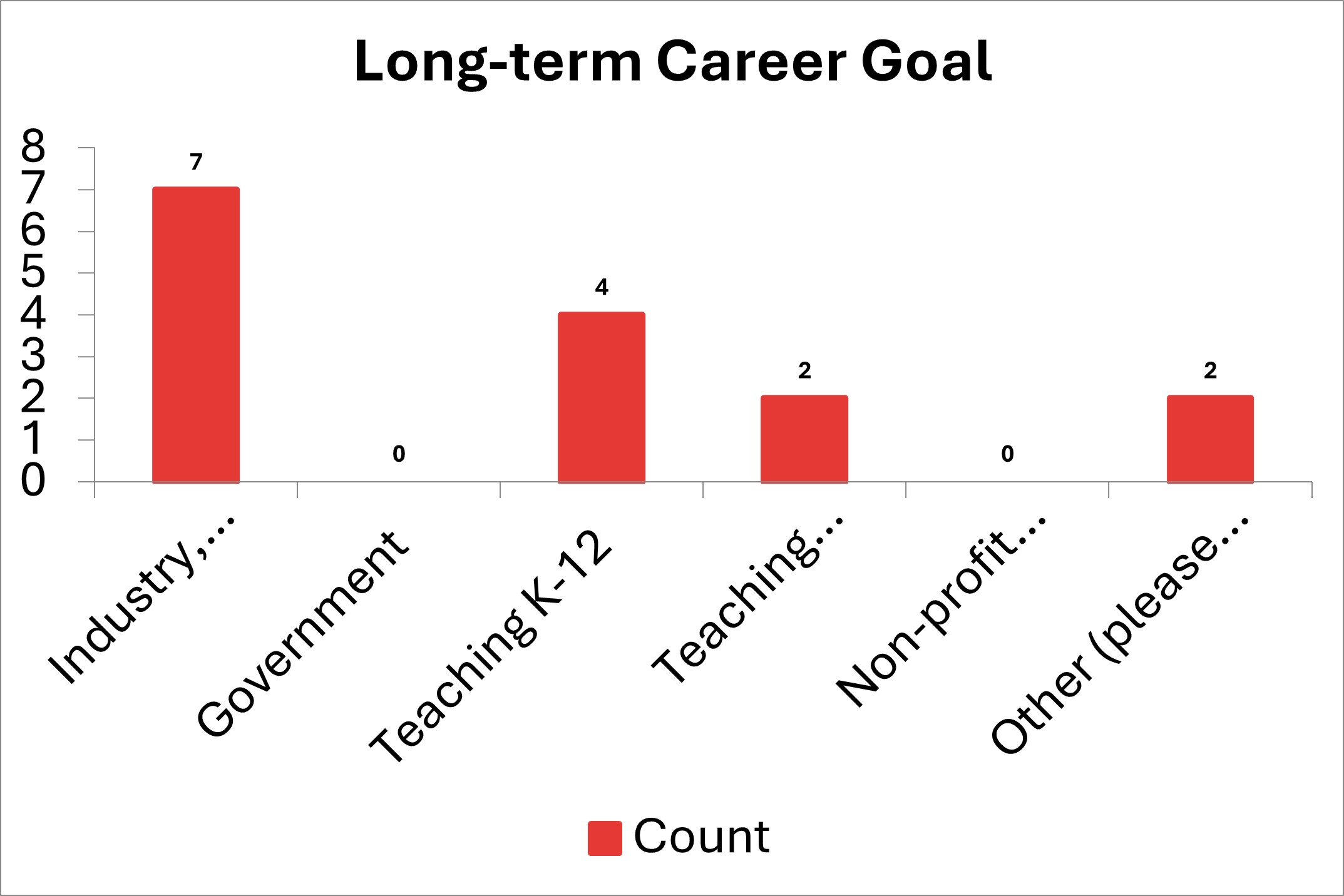

The analysis of survey data shed light on the students’ perception of their development and preparation for further study and/or entry into the workforce. Further, the responses gave valuable insight into long-term career goals, and participation in student groups/activities, e.g., math club.

b) What areas of strength in the program were identified from this assessment process?

- Students felt that the undergraduate program did a good job of empowering them to be effective users of mathematics and statistics to formulate and solve a wide array of problems.

- Students felt that faculty, at both the tenure-track (TT) and non-tenure-track (NTT) levels, were very engaged and invested in their educational journey.

- Students appreciated that the theme of modeling was a consistent thread throughout our undergraduate course sequences.

c) What areas were identified that either need improvement or could be improved in a different way from this assessment process?

- Undergraduate advisors could more clearly articulate the differences between the mathematics and applied mathematics options and the unique assets of each.

- Some students didn’t feel that they had ample resources/knowledge for applying to graduate school and/or jobs.

- Undergraduate participation in math club, modeling competitions, and undergraduate research was low for the present cohort.

6. How We Responded.

a) Describe how "What Was Learned" was communicated to the department, or program faculty. How did faculty discussions re-imagine new ways program assessment might contribute to program growth/improvement/innovation beyond the bare minimum of achieving program learning objectives through assessment activities conducted at the course level?

A summary report of survey responses was communicated to faculty. Moreover, undergraduate advising was a topic (including lessons from the survey data) for the department’s October faculty meeting.

b) How are the results of this assessment informing changes to enhance student learning in the program?

These data do not suggest that major changes are needed to the assessed curriculum.

This cycle has suggested the utility of student survey data at the time of graduation. As such, the Undergraduate Program Committee will recommend including survey data in program assessment every other year. Further, this committee will develop a more detailed rubric that explicitly connects the student responses with department PLOs.

c) If information outside of this assessment is informing programmatic change, please describe that.

Not at this time.

d) What support and resources (e.g. workshops, training, etc.) might you need to make these adjustments?

Not at this time.

7. Closing the Loop(s). Reflect on the program learning outcomes, how they were assessed in the previous cycle (refer to #1 of the report), and what was learned in this cycle. What action will be taken to improve student learning objectives going forward?

a) In reviewing the last report that assessed the PLO(s) in this assessment cycle, what changes proposed were implemented and will be measured in future assessment reports?

Our 2021-22 report indicated that M 384 would attend to more intricate arguments, M 329 would focus on mathematical knowledge for teaching about proof, and STAT 412 would focus on interactions in models. Faculty are working on those aims in the current year.

b) Have you seen a change in student learning based on other program adjustments made in the past? Please describe the adjustments made and subsequent changes in student learning.

Our prior report indicated that we would maintain a commitment to the more advanced learning goals in our courses, and we continue to discuss opportunities for this in our faculty discussions.